There are many ways you can analyse your Google Analytics data. In this post I use GA Traffic Sources exported csv data to try out Incanter, Clojure and MongoDB. This post is part of

Loganis GAckup tools. All clojure codes can be used under the same license as Clojure's Eclipse Public License 1.0

Preparing data

Log into Google Analytics (GA) and go to

Traffic Sources - All Traffic . Select date range or other options like

Show rows: according to your need. Then click

Export - CSV above Explorer tab.

Preparing software

You need to install the following software packages:

MongoDB

Go to

MongoDB Dowloads page, and install it.

Clojure and Incanter

Create a new project using lein. We use mediana project name in this post.

lein new mediana

Edit your project.clj like this example:

(defproject mediana "0.0.1"

:description "Mediana Loganis GA tool"

:dependencies [[org.clojure/clojure "1.3.0"]

[incanter "1.3.0" ]]

:main mediana.core

:aot :all

)

Download Clojure, Incanter and dependecies.

lein deps

Create a 00import2mongo.sh file into main project directory. This script removes extra chars from exported CSV file and imports it into MongoDB with a given database and collection name:

#!/bin/bash

# Clean up and import GA exported csv

# usage: 00import2mongo.sh ga-export.csv DB-name Collection-name

if [ "$1" != "" ]

then

# remove comments, empty lines, Day values, then remove slahes,percents,spaces,points from fieldnames

sed -e '/^#.*/d;/^$/d;/^Day\,/,$d' $1 | sed -e '1s/ \/ //g;1s/%//g;1s/ //g;1s/\.//g' | mongoimport -d $2 -c $3 --drop --headerline --type csv -

else

echo "not ok"

fi

core.clj

Edit src/mediana/core.clj file. We will use Incanter interactively in an Emacs swank clojure REPL session using M-x clojure-jack-in. If you do not have a configured Emacs environment, please visit

Emacs-Live which is easy to configure. In this post we use c5 database name for GA data. We use 1 namespace and 3 clj files. First core.clj that loads util.clj and desktop.clj. util.clj contains helper functions. desktop.clj contains a nopfn or No Operation function where we can evaluate clojure code.

(ns mediana.core

(:gen-class)

(:use (incanter io core stats charts datasets mongodb ))

(:require [clojure.java.io :as io]

[clojure.string :as string] )

(:require [clj-time.core ])

(:require [incanter.distributions :as dist])

(:use somnium.congomongo)

(:require clojure.java.shell)

(:refer-clojure)

)

(use 'somnium.congomongo)

(use 'incanter.mongodb)

(mongo! :db "c5")

(load "util")

(load "desktop")

; main

(defn -main [& args]

(prn "hello world")

)

util.clj

Here I prepared some helper functions. iof: index of an item in a collection, sreplace: string replace, parse-int, avg: average of a row, p2n: percent to number, s2n: string to number, t2s time string to seconds.

(in-ns 'mediana.core)

(defn iof

"Index of an item in a coll"

([item coll]

(iof item coll 0))

([item coll from-idx]

(loop [idx from-idx coll (seq (drop from-idx coll))]

(if coll (if (= item (first coll)) idx

(recur (inc idx) (next coll)))

-1))))

(defn sreplace

"Replace r in s srting to nil"

[s r]

(clojure.string/replace s (java.util.regex.Pattern/compile r) ""))

(defn parse-int

"Parse string into integer"

[s]

(Integer. (re-find #"\d+" s )))

(defn avg

"Calculate average of a row"

[row]

(/ (sum row) (nrow row)))

(defn p2n

"Percent string to number"

[s]

(let [sn (read-string (sreplace s ",|%"))]

(if (number? sn) (/ sn 100))))

(defn s2n

"String number to number"

[s]

(let [sn (read-string (sreplace s ",|%"))]

(if (number? sn) sn)))

(defn t2s

"Time string to seconds"

[s]

(let [tv (clojure.string/split s #":")

sec (if (= 3 (length tv))

(+ (* (parse-int (nth tv 0)) 3600)

(* (parse-int (nth tv 1)) 60)

(parse-int (nth tv 2)))

0)]

sec))

util.clj MongoDB functions

fdb: fetch database, fdr fetch data row, fdd: fetch distinct data

(defn fdb

"Fetch DataBase, an alias for mongo db query"

[& args]

(apply fetch-dataset args))

(defn fdr

"Fetch data row, query coll for only row"

[coll row]

($ row (fdb coll :only [row])))

(defn fdd

"Fetch distinct data, query coll for only distinct values of row"

[coll row]

(distinct-values coll (name row)))

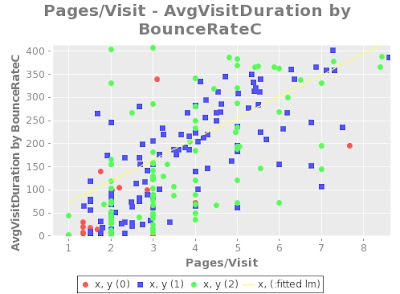

util.clj chart and weighted sort

lm-chart: scatter-plot chart with linear model, lm-gchart: lm-chart + group by variable, etv-ws: estimated value for weighted sort.

(defn lm-chart

"Linear model of x y in a chart"

[x y xl yl]

(def sp (scatter-plot x y

:title (str xl " " yl)

:x-label (str xl)

:y-label (str yl)))

(def lm (linear-model y x))

(add-lines sp x (:fitted lm))

(view sp))

(defn lm-gchart

"Linear model of x y in a chart groupped by g"

[x y xl yl g]

(def sp (scatter-plot x y

:group-by g

:legend true

:title (str xl " " yl)

:x-label (str xl)

:y-label (str yl) ))

(def lm (linear-model y x))

(add-lines sp x (:fitted lm))

(view sp))

(defn etv-ws

"Estimated weighted sort value for y based on x where mx maximum of Xs, ay average of Ys."

[x y mx ay]

(let [etv ($= (x / mx * y) + ((1 - (x / mx)) * ay ))]

etv))

util.clj init db

Read comments in source. First we call 00import2mongo.sh script that cleans and import GA csv into c5 database m1 collection. Then we parallel process imported collection into an integer/float only m2 collection using pmap.

(defn initdb [] "Initialize DB"

;; import GA exported csv into mongo :m1 collection using 00import2mongo shell script for csv cleaning before import

(prn (:out (clojure.java.shell/sh "/path_to_dir/mediana/00import2mongo.sh" "/path_to_dir/Analytics-All-Traffic-20121120-20121220.csv" "c5" "m1")))

;; drop :m2 processed collection

(drop-coll! :m2)

;; Distinct values for SourceMedium. A traffic source may occur several times in case of a secondary dimension selected for csv export

(def SourceMedium (fdd :m1 :SourceMedium))

;; process all object ids of :m1 coll

(let [oids ($ :_id (fdb :m1))]

(pmap

(fn [y]

(let [rec (fdb :m1 :where {:_id y})

;; SourceMedium as integer index

smi (iof ($ :SourceMedium rec) SourceMedium)

;; String to integer Number

vis (s2n ($ :Visits rec))

pav ($ :PagesVisit rec)

avs ($ :AvgVisitDuration rec)

;; Time to seconds as integer

avd (t2s avs)

;; Percent to float

nev (p2n ($ :NewVisits rec))

bor (p2n ($ :BounceRate rec))

;; store cleaned up values

log (insert! :m2 {:SourceMedium smi

:Visits vis

:PagesVisit pav

:AvgVisitDuration avd

:NewVisits nev

:BounceRate bor})

]

))

oids)))

util.clj classify-row

We use a simple classification: below average, average, above average using average and standard deviation. BounceRate is a negative metric: lower value means better.

(def negmets "Negative Metrics or lower value means better"

#{:BounceRate})

(defn classify-row

"Classify row to avg and sd of row in coll"

[coll row]

(let [;; all object ids

oids (fdr coll :_id)

;; get all data of row

rowdata (fdr coll row)

;; calc average of row

average (avg rowdata)

;; calc half os standard deviation for row data

halfsd (/ (sd rowdata) 2)

;; max and min values: avg +/- halfsd

maxv (+ average halfsd)

minv (- average halfsd)

]

;; parallel process object ids

(pmap

(fn [y]

(let [;; one object id

oid y

;; get full record of oid

rec (fetch-one coll :where {:_id oid})

;; get row value of record

yre (row rec)

;; classify

cla (;; if row is a negativ metric (like Bounce rate: the higher the worse

if (contains? negmets row)

;; Negative metric: max point when lowest

(cond (> yre maxv) 0

(< yre minv) 2

:else 1)

;; Positive metric: max point when highest

(cond (> yre maxv) 2

(< yre minv) 0

:else 1))

;; Merge classification value back to record

log (update! coll rec (merge rec

;; Add extra "C" char to the name of metric

{(keyword (str (name row) "C")) cla}))

]))

;; Object ids input

oids)))

util.clj weighted sort collection

This weighted sort function is similar to Google Analytics wieghted sort.

;; weighted sort weight calculation for a given row

(defn weighted-sort-row

"Calculate and store weighted sort value in coll using wrow as weight.

Store new weigted values in rowW name as new column"

[coll wrow row]

(let [oids (fdr coll :_id)

ydata (fdr coll row)

ay (avg ydata)

xdata (fdr coll wrow)

mx (reduce max xdata)

]

(pmap (fn [y]

(let [oid y

rec (fetch-one coll :where {:_id oid})

x (wrow rec)

y (row rec)

w (etv-ws x y mx ay)

log (update! coll rec (merge rec

{(keyword (str (name row) "W")) w}))

]))

oids)))

That is all for preparation. In my next post we turn to desktop.clj where we interactively explore real Google Analytics data using the above functions.